Probabilistic programming, Modeling the world through Uncertainty

Published:

This post describes the workshop on probabilistic programming held at the Amirkabir Artificial Intelligence Student Summit in August 2021. It introduces the core concepts of probabilistic programming and collects the content and sources you need to get started.

All code and content from the probabilistic programming workshop at the Amirkabir Artificial Intelligence Student Summit (2021).

All code is available here

1- Description

Workshop Level: Intermediate

Prerequisites:

None, although familiarity with probabilistic graphical models is preferred. If you lack it, an interest in Bayes' theorem and probability distributions is enough!

Syllabus:

You may have heard of TensorFlow Probability. In this workshop, we discuss what it is for and, more generally, what probabilistic programming is. To do so, we model several problems that call for probabilistic methods, that is, problems whose world must be viewed through uncertainty. We implement the solutions not only with TensorFlow Probability but also with other packages such as PyMC3.

The workshop includes:

- Introduction to probabilistic programming.

- Some concepts in probabilistic graphical models, including variational inference and Monte Carlo sampling.

- Introduction to TensorFlow Probability and PyMC3.

- Modeling multiple probabilistic problems with these frameworks.

Estimated duration of the workshop: 150 minutes

2- Probabilistic programming

Frameworks for defining probabilistic models, plus automatic parameter estimation.

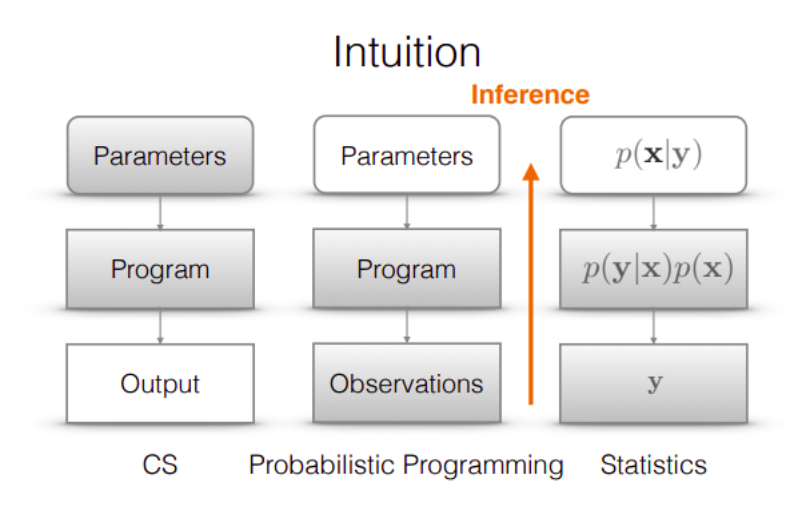

Probabilistic Programming is a programming paradigm in which probabilistic models are specified and inferences for these models are performed automatically.

It can be used to create systems that help make decisions in the face of uncertainty.

```python
import tensorflow as tf
import tensorflow_probability as tfp

# Pretend to load synthetic data set.
features = tfp.distributions.Normal(loc=0., scale=1.).sample(int(100e3))
labels = tfp.distributions.Bernoulli(logits=1.618 * features).sample()

# Specify model.
model = tfp.glm.Bernoulli()

# Fit model given data.
coeffs, linear_response, is_converged, num_iter = tfp.glm.fit(
    model_matrix=features[:, tf.newaxis],
    response=tf.cast(labels, dtype=tf.float32),
    model=model)
```

3- Uncertainty

The necessity for modeling uncertainty:

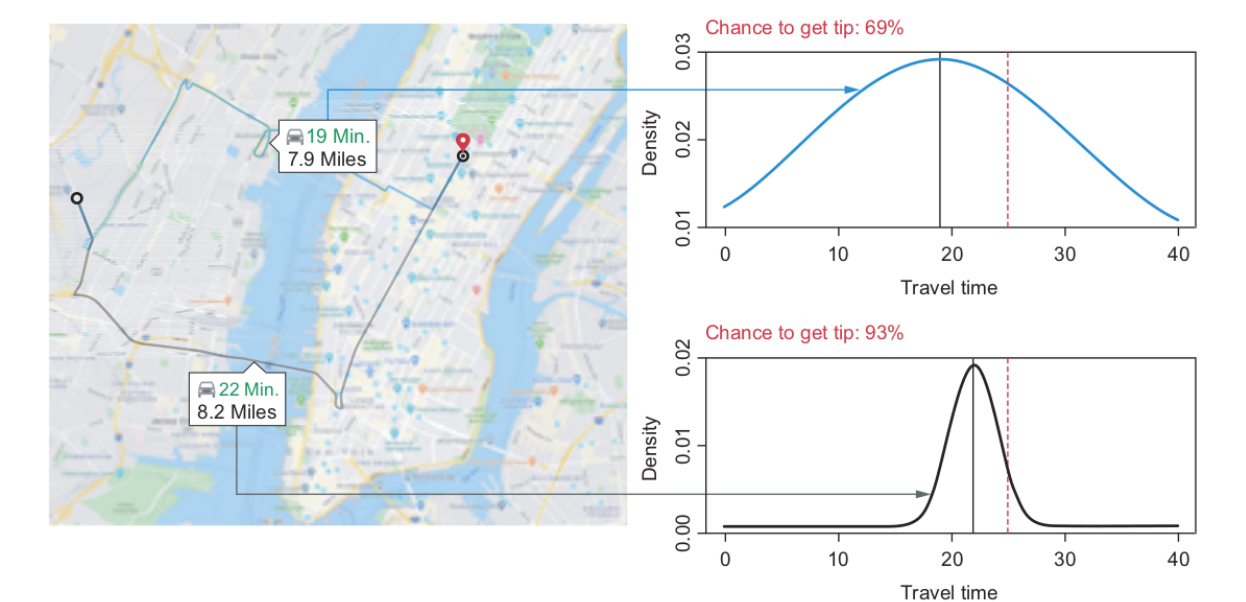

Travel time prediction of a satnav. On the left side of the map is the deterministic version, in which just a single number is reported. On the right side are the probability distributions for the travel time of the two routes.

What is the difference between probabilistic and non-probabilistic classification?

Probabilistic regression

3-1 Bayesian Framework

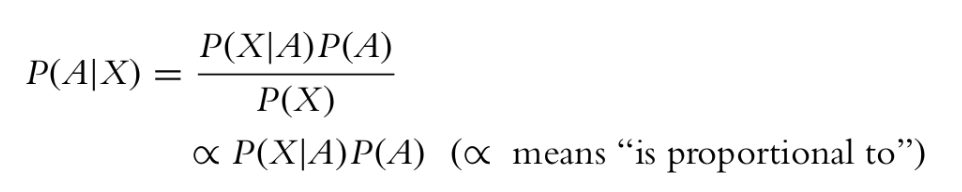

The main goal of this workshop is to compute the left-hand side of this equation, which is called the posterior probability.
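For reference, Bayes' theorem in its standard form is written out here. The symbols $\theta$ (model parameters) and $D$ (observed data) are notation assumed for this sketch and may differ from the figure.

$$
p(\theta \mid D) = \frac{p(D \mid \theta)\, p(\theta)}{p(D)}, \qquad p(D) = \int p(D \mid \theta)\, p(\theta)\, d\theta
$$

Here $p(\theta \mid D)$ is the posterior, $p(D \mid \theta)$ the likelihood, $p(\theta)$ the prior, and $p(D)$ the evidence. The integral in the evidence is usually what makes the posterior hard to compute exactly.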

Coding

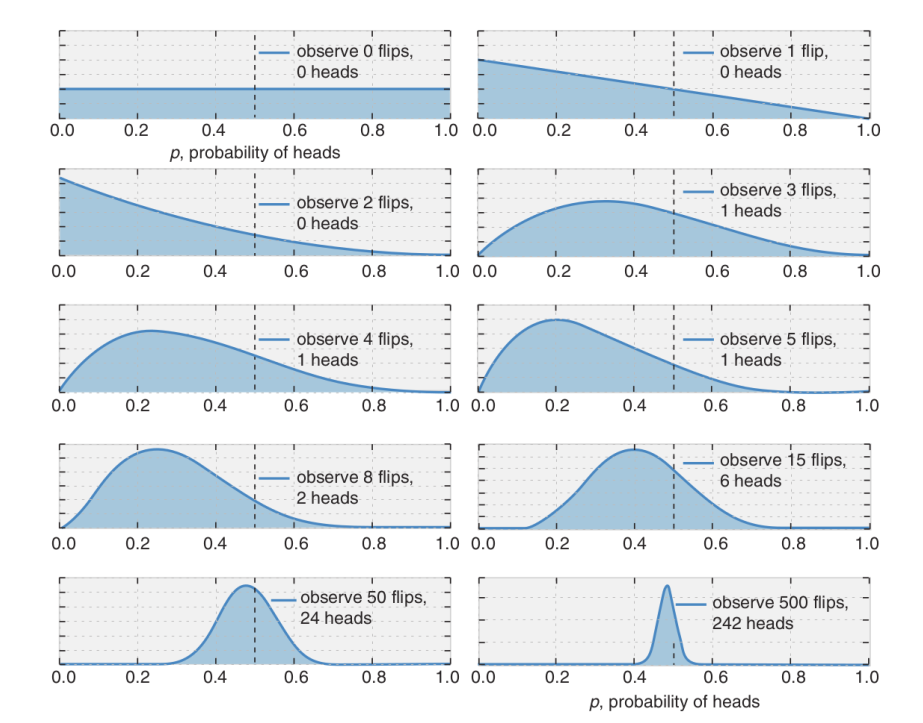

Implementation of the coin-flip example to explain how to perform inference under the Bayesian framework.
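As a rough illustration of the coin-flip idea (not the workshop's notebook), the sketch below uses the conjugate Beta-Bernoulli update in plain Python. The uniform Beta(1, 1) prior, the coin bias `true_p`, and the function names are all assumptions made for this example.

```python
import random

def beta_posterior(flips, prior_a=1.0, prior_b=1.0):
    """Return the (a, b) parameters of the Beta posterior over P(heads).

    flips is a sequence of 0/1 outcomes (1 = heads). With a Beta(prior_a,
    prior_b) prior and a Bernoulli likelihood, the posterior is again a Beta
    distribution: heads are added to a, tails to b.
    """
    heads = sum(flips)
    tails = len(flips) - heads
    return prior_a + heads, prior_b + tails

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

random.seed(0)
true_p = 0.7  # hypothetical coin bias, used only to simulate data
flips = [1 if random.random() < true_p else 0 for _ in range(500)]

# The posterior tightens around the true bias as more flips are observed.
for n in (0, 5, 50, 500):
    a, b = beta_posterior(flips[:n])
    print(f"after {n:3d} flips: Beta({a:.0f}, {b:.0f}), mean = {beta_mean(a, b):.3f}")
```

With no data the posterior equals the prior, and each additional flip shifts and narrows it. This closed-form update only exists for conjugate pairs, which is why general models need MCMC or variational inference.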

Probabilistic Programming and Bayesian Methods for Hackers

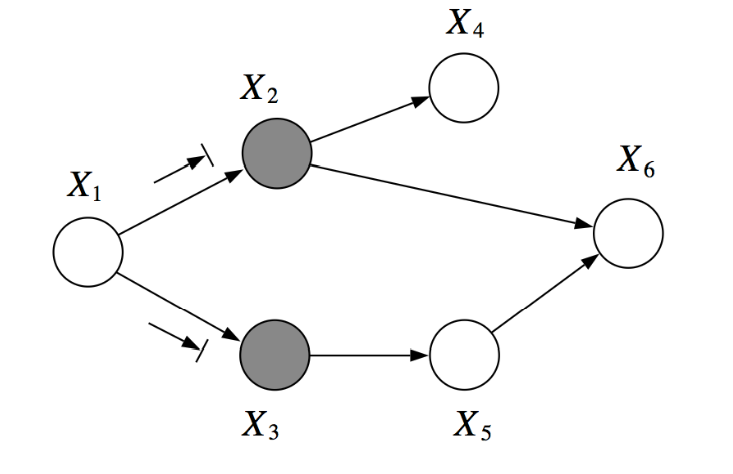

3-2 Introduction to Probabilistic Graphical models

In this part, we discuss probabilistic graphical models (PGMs): first how to represent a PGM as a graph, and then how to learn a PGM from observed data.

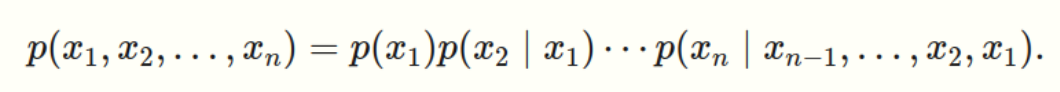

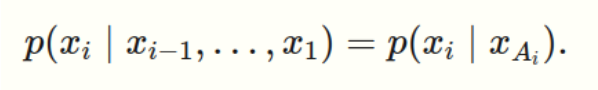

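- Representation: model distributions with graphs.

Joint distribution with chain rule:

$$p(x_1, \dots, x_n) = p(x_1)\, p(x_2 \mid x_1) \cdots p(x_n \mid x_{n-1}, \dots, x_1)$$

Conditional distribution from graph:

$$p(x_i \mid x_{i-1}, \dots, x_1) = p(x_i \mid x_{A_i})$$

where $x_{A_i}$ denotes the parents of $x_i$ in the graph.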

Graph Example:

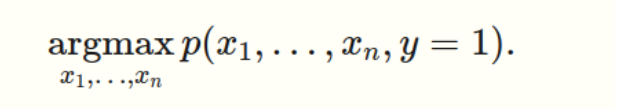

- Inference: answer questions about the distribution.

For example, finding the most probable assignment from the distribution.

More generally, learning means fitting the model to a dataset. Inference can be exact or approximate; approximate inference is the most widely used approach in probabilistic programming.

Two approximate methods are Markov chain Monte Carlo (MCMC) sampling and variational inference, which we discuss later and use in code for our problems.

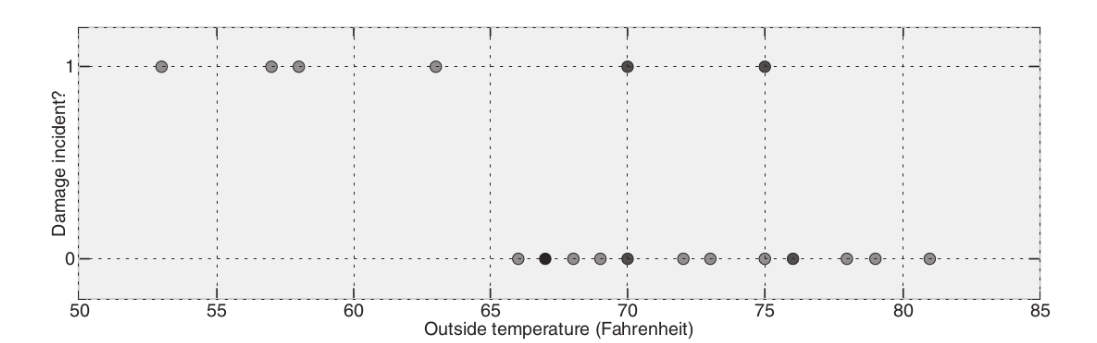
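To make representation and exact inference concrete, here is a minimal sketch of a three-node Bayesian network evaluated by brute-force enumeration. The network (rain, sprinkler, wet grass) and every probability in it are hypothetical values chosen for illustration; they are not taken from the workshop.

```python
from itertools import product

# Hypothetical conditional probability tables for the graph
# Rain -> Sprinkler, Rain -> WetGrass, Sprinkler -> WetGrass.
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER_GIVEN_RAIN = {
    True: {True: 0.01, False: 0.99},
    False: {True: 0.4, False: 0.6},
}
# P(wet = True | sprinkler, rain), keyed by (sprinkler, rain).
P_WET_GIVEN = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}

def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node given its parents."""
    p_wet = P_WET_GIVEN[(sprinkler, rain)]
    return (P_RAIN[rain]
            * P_SPRINKLER_GIVEN_RAIN[rain][sprinkler]
            * (p_wet if wet else 1.0 - p_wet))

def posterior_rain_given_wet():
    """Exact inference by enumeration: P(rain = True | wet = True)."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True)
                   for r, s in product((True, False), repeat=2))
    return numerator / evidence

print(f"P(rain | grass is wet) = {posterior_rain_given_wet():.4f}")
```

Enumeration sums over every joint assignment, so its cost grows exponentially with the number of variables. That growth is the practical reason approximate methods such as MCMC and variational inference are used instead.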

4- Our First Probabilistic Program

Space shuttle disaster:

Predict the probability of an incident from the launch temperature, taking the uncertainty in the data into account.

Coding (PyMC3)

We will model this problem using PyMC3 and MCMC methods.
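One common way to set up this kind of problem, following the treatment in Probabilistic Programming and Bayesian Methods for Hackers, is a logistic model of the incident probability as a function of temperature $t$. The symbols $\alpha$, $\beta$, and $D_i$ are notation assumed for this sketch, and the priors are deliberately left unspecified.

$$
p(t) = \frac{1}{1 + e^{\beta t + \alpha}}, \qquad D_i \sim \text{Bernoulli}\big(p(t_i)\big)
$$

Here $D_i$ records whether an incident occurred on launch $i$ at temperature $t_i$. After placing priors on $\alpha$ and $\beta$, MCMC draws samples from the posterior $p(\alpha, \beta \mid D)$, which yields a distribution over $p(t)$ at any temperature rather than a single curve.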

Tensorflow, an introduction to probabilistic,

Probabilistic Programming and Bayesian Methods for Hackers - More Pymc3

5- Inference methods

Probabilistic methods require computing the posterior probability after observing data. MCMC and variational inference are the two most common approaches. We explain both methods in more detail and compare them to understand how to choose between them for a given problem.

Pyro, Stanford-cs228, pymc, probflow

5-1 Markov chain Monte Carlo (MCMC)

A class of algorithms for sampling from a probability distribution. The more samples are drawn, the more closely their distribution matches the target distribution.
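As a minimal sketch of the idea, the following random-walk Metropolis sampler (one simple member of the MCMC family) draws from a standard normal using only its unnormalized log-density. The target, step size, and burn-in length are assumptions chosen for illustration; libraries such as PyMC3 use more sophisticated samplers.

```python
import math
import random

def log_target(x):
    """Unnormalized log-density of the target; a standard normal here."""
    return -0.5 * x * x

def metropolis(log_density, n_samples, step=1.0, x0=0.0, seed=0):
    """Random-walk Metropolis: propose x + noise, accept with prob min(1, ratio)."""
    rng = random.Random(seed)
    x = x0
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        log_accept = log_density(proposal) - log_density(x)
        if rng.random() < math.exp(min(0.0, log_accept)):
            x = proposal  # accept the move; otherwise stay at x
        samples.append(x)
    return samples

samples = metropolis(log_target, n_samples=20000)[1000:]  # drop burn-in
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"sample mean = {mean:.3f}, sample variance = {var:.3f}")
```

The sampler only ever evaluates differences of log-densities, so the normalizing constant of the target is never needed. This is what makes MCMC usable on posteriors whose evidence term is intractable.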

5-2 Variational inference

Variational inference casts approximate Bayesian inference as an optimization problem: fit a simpler distribution to the desired posterior.

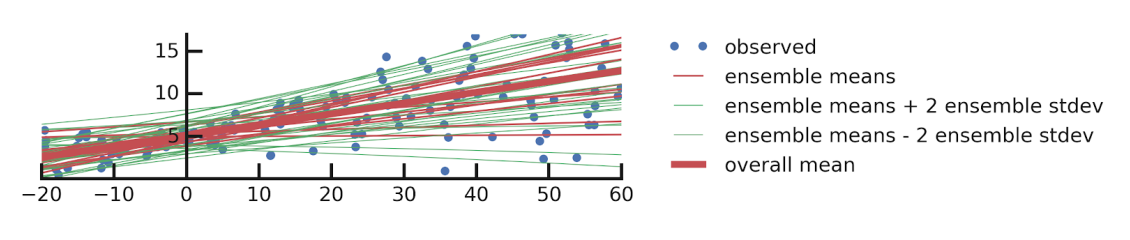
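The optimization view can be sketched in a few lines of plain Python. The example below fits a Gaussian $q(z) = \mathcal{N}(\mu, \sigma)$ to a target by stochastic gradient ascent on the ELBO with reparameterized samples. The target (a Gaussian with mean 3.0 and standard deviation 0.5 standing in for a posterior), the learning rate, and the batch size are all assumptions for illustration.

```python
import math
import random

TARGET_MEAN, TARGET_STD = 3.0, 0.5  # hypothetical stand-in for a posterior

def dlogp_dz(z):
    """Gradient of the unnormalized log target with respect to z."""
    return -(z - TARGET_MEAN) / TARGET_STD ** 2

def fit_gaussian_vi(steps=2000, batch=32, lr=0.01, seed=0):
    """Fit q(z) = Normal(mu, exp(rho)) by maximizing a Monte Carlo ELBO."""
    rng = random.Random(seed)
    mu, rho = 0.0, 0.0
    for _ in range(steps):
        sigma = math.exp(rho)
        grad_mu, grad_rho = 0.0, 0.0
        for _ in range(batch):
            eps = rng.gauss(0.0, 1.0)
            z = mu + sigma * eps           # reparameterized sample from q
            g = dlogp_dz(z)
            grad_mu += g                   # dz/dmu = 1
            grad_rho += g * sigma * eps    # dz/drho = sigma * eps
        grad_mu /= batch
        grad_rho = grad_rho / batch + 1.0  # +1: gradient of q's entropy wrt rho
        mu += lr * grad_mu                 # gradient ascent on the ELBO
        rho += lr * grad_rho
    return mu, math.exp(rho)

mu, sigma = fit_gaussian_vi()
print(f"fitted q(z) = Normal({mu:.2f}, {sigma:.2f})")
```

Because the target here is itself Gaussian, the fitted parameters should land close to the target's mean and standard deviation. For a non-Gaussian posterior the same procedure returns the best Gaussian approximation in the ELBO sense, which may miss skew or multiple modes.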

Coding (TensorFlow Probability)

In this part, we implement probabilistic regression as an alternative to classic regression. The final model accounts for uncertainty and is fitted with variational inference using TensorFlow Probability.
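A probabilistic regression model outputs a distribution rather than a point estimate, and a typical training loss is the negative log-likelihood of the observed target under that distribution. The sketch below assumes a Gaussian output with a predicted mean and standard deviation; the function name and the numbers are hypothetical and chosen only for illustration.

```python
import math

def gaussian_nll(y, mean, std):
    """Negative log-likelihood of y under Normal(mean, std)."""
    return (0.5 * math.log(2.0 * math.pi * std ** 2)
            + (y - mean) ** 2 / (2.0 * std ** 2))

y = 2.0  # hypothetical observed target

# Three hypothetical predictions for the same observation.
confident_wrong = gaussian_nll(y, mean=0.0, std=0.1)
uncertain_wrong = gaussian_nll(y, mean=0.0, std=2.0)
confident_right = gaussian_nll(y, mean=2.0, std=0.1)

print(f"confident but wrong: {confident_wrong:.2f}")
print(f"uncertain and wrong: {uncertain_wrong:.2f}")
print(f"confident and right: {confident_right:.2f}")
```

The loss punishes a confident wrong prediction far more than an uncertain one, so the model is rewarded for widening its predicted spread where the data are noisy. Classic mean-squared-error regression corresponds to fixing the standard deviation to a constant.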

regression with probabilistic layers

6- Introduction to TensorFlow Probability and PyMC3

In this part, we revisit TensorFlow Probability and PyMC3 and review different parts of these frameworks, including:

How to define probability distributions?

How to define priors?

How to compute posterior probability?

Coding: PyMC, tensorflow-probability

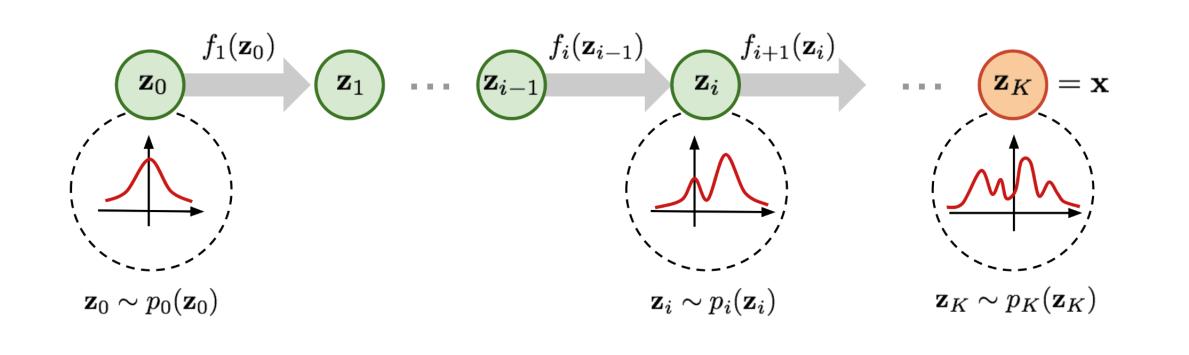

How to enrich probability distributions (Advanced)

As discussed before, there are well-known probability distributions such as the normal distribution. Can such a distribution always model the data-generating process, and can variational inference capture complex posteriors using only a normal distribution? The answer is no! Normalizing flows are one of the most common methods in the literature for transforming simple distributions into more complex ones. With this capability, variational inference becomes a more accurate approximation method.
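The mechanism behind a normalizing flow is the change-of-variables formula: push a simple base sample through an invertible map and correct the density by the map's Jacobian. The sketch below uses a single hand-written transform, $x = \exp(\text{shift} + \text{scale} \cdot z)$ with $z \sim \mathcal{N}(0, 1)$, and checks the result against the closed-form log-normal density. The transform and parameter values are assumptions for illustration; real flows stack many learned transforms.

```python
import math

def standard_normal_logpdf(z):
    """Log-density of the base distribution Normal(0, 1)."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)

def flow_logpdf(x, shift=0.0, scale=1.0):
    """Log-density of x = exp(shift + scale * z) with z ~ Normal(0, 1).

    Change of variables: log p_x(x) = log p_z(z) - log|dx/dz|,
    where z = (log(x) - shift) / scale and dx/dz = scale * x.
    """
    z = (math.log(x) - shift) / scale
    return standard_normal_logpdf(z) - math.log(abs(scale) * x)

def lognormal_logpdf(x, mu, sigma):
    """Closed-form log-normal log-density, used only as a reference."""
    return (-math.log(x * sigma * math.sqrt(2.0 * math.pi))
            - (math.log(x) - mu) ** 2 / (2.0 * sigma ** 2))

for x in (0.5, 1.0, 3.0):
    via_flow = flow_logpdf(x, shift=0.3, scale=0.8)
    reference = lognormal_logpdf(x, mu=0.3, sigma=0.8)
    print(f"x = {x}: flow = {via_flow:.6f}, closed form = {reference:.6f}")
```

A symmetric normal becomes a skewed, positive-only distribution after one transform, and the density stays exactly computable. That combination of flexibility and tractable density is what lets flows serve as richer approximating families in variational inference.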

Coding (TensorFlow Probability)

variational inference with normalizing flow

variational inference and joint distribution

normalizing flow: a practical guide

Main References

[1] Davidson-Pilon, C., 2015. Bayesian methods for hackers: probabilistic programming and Bayesian inference. Addison-Wesley Professional.

[2] Duerr, O., Sick, B. and Murina, E., 2020. Probabilistic Deep Learning: With Python, Keras and TensorFlow Probability. Manning Publications.

[3] Dillon, J.V., Langmore, I., Tran, D., Brevdo, E., Vasudevan, S., Moore, D., Patton, B., Alemi, A., Hoffman, M. and Saurous, R.A., 2017. Tensorflow distributions. arXiv preprint, arXiv:1711.10604.

[4] Salvatier, J., Wiecki, T.V. and Fonnesbeck, C., 2016. Probabilistic programming in Python using PyMC3. PeerJ Computer Science, 2, p.e55.

[5] https://github.com/CamDavidsonPilon/Probabilistic-Programming-and-Bayesian-Methods-for-Hackers

[6] https://www.tensorflow.org/probability

[7] https://docs.pymc.io/

[8] https://ermongroup.github.io/cs228-notes/